Figure 2—figure supplement 4.

- ID

- ZDB-FIG-201209-38

- Publication

- Segebarth et al., 2020 - On the objectivity, reliability, and validity of deep learning enabled bioimage analyses

- Other Figures

-

- Figure 1—figure supplement 1.

- Figure 1—figure supplement 1.

- Figure 1—figure supplement 2.

- Figure 2—figure supplement 1.

- Figure 2—figure supplement 1.

- Figure 2—figure supplement 2.

- Figure 2—figure supplement 3.

- Figure 2—figure supplement 4.

- Figure 3—figure supplement 1—source data 1.

- Figure 3—figure supplement 1—source data 1.

- Figure 4—source data 2.

- Figure 5—figure supplement 1.

- Figure 5—figure supplement 1.

- Figure 5—figure supplement 2.

- Figure 5—figure supplement 3.

- Figure 5—figure supplement 4.

- Figure 5—figure supplement 5.

- All Figure Page

- Back to All Figure Page

|

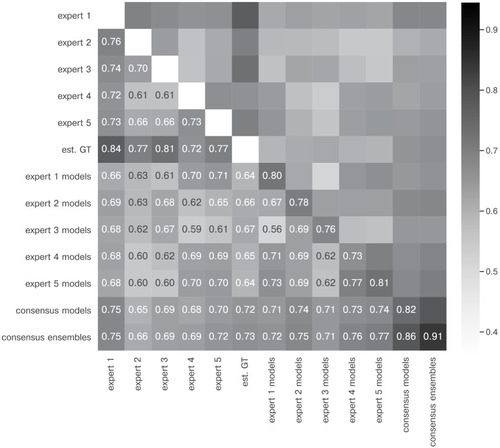

The heatmap shows the mean of M̄IoU for the image feature annotations of the indicated experts. Segmentation masks of the five human experts (Nexpert = 1 per expert), the estimated ground-truth (Nest. GT = 1), the respective expert models, the consensus models, and the consensus ensembles (Nmodels = 4 per model or ensemble) are compared. The diagonal values show the inter-model reliability (no data available for the human experts who only annotated the images once). Again, consensus ensembles show highest reliability (0.91). Est. GT annotations are directly derived from manual expert annotations, which renders this comparison favorable. |