- Title

- PiVR: An affordable and versatile closed-loop platform to study unrestrained sensorimotor behavior

- Authors

- Tadres, D., Louis, M.

- Source

- Full text @ PLoS Biol.

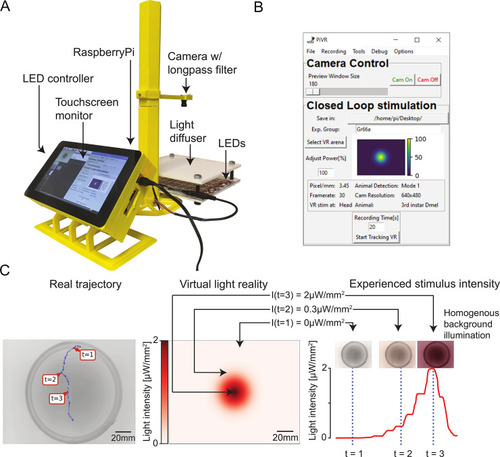

(A) Picture of the standard PiVR setup. The animal is placed on the light diffuser and illuminated from below using infrared LEDs and recorded from above. The Raspberry Pi computer and the LED controller are attached to the touch screen, which permits the user to interface with the PiVR setup. (B) Screenshot of the GUI while running a virtual reality experiment. The GUI has been designed to be intuitive and easy to use while presenting all important experimental parameters that can be modified. (C) Virtual realities are created by updating the intensity of a homogeneous light background based on the current position of a tracked animal mapped onto a predefined landscape shown at the center. (Center) Predefined virtual gradient with a Gaussian geometry. (Left) Trajectory of an unconstrained animal moving in the physical arena. (Right) The graph indicates the time course of the light intensity experienced by the animal during the trajectory displayed in the left panel. Depending on the position of the animal in the virtual-light gradient, the LEDs are turned off (t = 1) or turned on at an intermediate (t = 2) or maximum intensity (t = 3). GUI, graphical user interface; LED, light-emitting diode; PiVR, Raspberry Pi Virtual Reality.
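The closed-loop principle described in panel C can be sketched in a few lines of Python. This is a minimal illustration and not the PiVR implementation: the function names `gaussian_landscape` and `intensity_at`, the arena size in pixels, the Gaussian width `sigma`, the 0 to 100 intensity scale, and the three sample positions are all assumptions chosen for the example.

```python
import numpy as np


def gaussian_landscape(width=640, height=480, sigma=80.0, max_intensity=100.0):
    """Build a hypothetical virtual gradient with a Gaussian geometry.

    The peak sits at the pixel (width // 2, height // 2); all sizes and
    the intensity scale are illustrative assumptions.
    """
    y, x = np.mgrid[0:height, 0:width]
    cx, cy = width // 2, height // 2
    r2 = (x - cx) ** 2 + (y - cy) ** 2
    return max_intensity * np.exp(-r2 / (2.0 * sigma ** 2))


def intensity_at(landscape, x, y):
    """Map a tracked position (x, y), in pixels, onto the landscape.

    Positions outside the arena are clamped to the nearest edge pixel.
    """
    height, width = landscape.shape
    col = int(np.clip(round(x), 0, width - 1))
    row = int(np.clip(round(y), 0, height - 1))
    return float(landscape[row, col])


landscape = gaussian_landscape()

# Three assumed positions along a trajectory: far from the virtual source,
# partway up the gradient, and at its center.
trajectory = [(0, 0), (200, 200), (320, 240)]
intensities = [intensity_at(landscape, x, y) for x, y in trajectory]

for (x, y), value in zip(trajectory, intensities):
    print(f"position=({x}, {y}) -> light intensity {value:.2f}")
```

In a real setup, the value returned at each tracked frame would be sent to the LED controller, so the homogeneous light background follows the animal's position in the virtual landscape. The three sample positions mirror the off, intermediate, and maximum conditions labeled t = 1, t = 2, and t = 3 in the caption.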

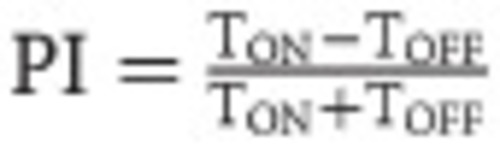

(A) Adult

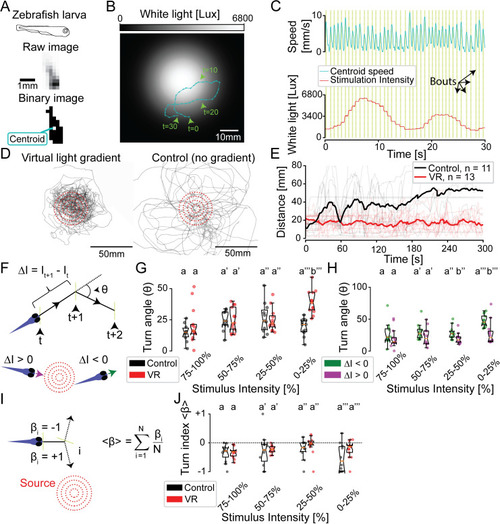

(A) Illustration of the identification of a moving zebrafish larva by PiVR. (B) Illustrative trajectory of a zebrafish larva in a virtual-light gradient with a Gaussian geometry. (C) Time course of the speed and the white-light intensity during the trajectory shown in panel B. Yellow vertical lines indicate automatically detected bouts. (D) Trajectories of 13 fish tested in the virtual-light gradient (left) and 11 fish in the control condition (right). Red circles indicate 10-, 20-, 30-, and 40-mm distances to the center of the virtual-light source (see